Search and Retrieval Augmented Generation (RAG)

TellusR is a strong search and Retrieval Augmented Generation (RAG) module in your architecture.

Improve relevance and performance

The TellusR AI search comes with a RAG Dashboard that allows you to tune the LLM context, tune the prompt and integrate with your preferred LLM through a GUI.

In this context, TellusR AI search performs Retrieval Augmented Generation (RAG), an important technique for improving the performance and relevance of generative AI models.

Generative AI with higher accuracy

AI search and generative AI are highly complementary. In fact, good search is the foundation of a working AI solution.

When generative AI is used for company-specific purposes, RAG searches the internal data sources and passes the most contextually relevant results to the generative AI application.

When the generative AI application works from these contextually relevant search results, its answers are more accurate than those of standalone generative AI or conventional chatbots. This is why TellusR is the first step in your AI strategy.
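To make the idea concrete, here is a minimal sketch of the retrieve-then-generate flow in Python. It is not TellusR's implementation: the documents, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all assumptions made for illustration. A real search engine would use far better relevance ranking, and the finished prompt would be sent to an LLM rather than printed.

```python
import re
from collections import Counter

# Hypothetical internal documents; in practice these come from your own data sources.
DOCUMENTS = {
    "returns-policy": "Customers can return unused products within 30 days of delivery.",
    "delivery-terms": "Standard delivery takes three to five working days within Norway.",
    "opening-hours": "The warehouse is open on weekdays from 07:00 to 16:00.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words."""
    return re.findall(r"\w+", text.lower())


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by how many question words they share (a toy relevance score)."""
    question_words = Counter(tokenize(question))
    scored = []
    for doc_id, text in documents.items():
        doc_words = Counter(tokenize(text))
        score = sum(min(count, doc_words[word]) for word, count in question_words.items())
        if score > 0:
            scored.append((score, doc_id, text))
    # Highest score first; ties are broken alphabetically by document id.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Put the retrieved passages in front of the question as context for the LLM."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "How long does standard delivery take within Norway?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)
```

The point of the sketch is the order of operations: search first, then hand only the relevant passages to the generative model. The unrelated document about opening hours never reaches the prompt.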

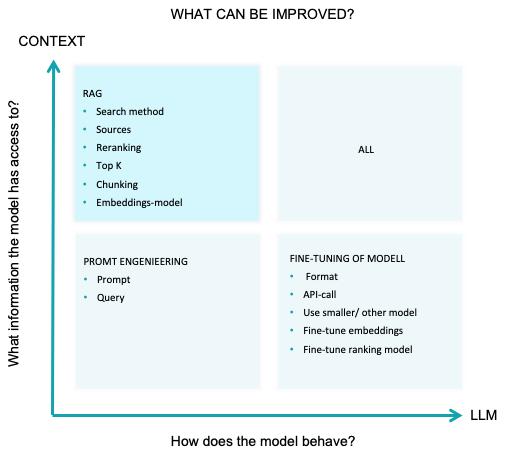

What can be improved?

TellusR makes it easy to integrate with your preferred LLM, to replace it, or to run several in parallel. ChatGPT was the best-known LLM in 2023, but there are plenty of others available.

They differ in speed, domain, quality, and cost. In 2025, you should consider several in your setup. TellusR or one of our partners can also help you evaluate which one is most suitable for your needs and implement it accordingly.

No, you don’t need the biggest language model

You need the one that’s right for the job you’re trying to solve. Here’s why.

GPT-4, Claude Opus, Gemini 1.5, LLaMA 3, Mistral, Granite, NorLLM, RWKV.

New language models are flooding in, each with more capabilities and catchy names. Every time a new one drops, two things happen: it sounds like yet another master’s degree just became obsolete overnight, and it’s introduced as “better.” Faster. Smarter.

Public agencies, healthcare providers, exporters — even small businesses — are all exploring generative AI. Boardrooms everywhere are feeling the pressure.

Amid all this excitement, it’s easy to fall for the idea that there’s a single “best” language model — the one you assume “everyone else” must be using.

But choosing a language model isn’t a one-and-done decision. It’s about preserving your freedom to choose at every step.

The model you choose decides whether you stay flexible — or get locked in

It’s tempting to think one big language model can handle everything. Like a digital Swiss Army knife ready to take on emails, case management, reports, customer service, and front-line inquiries.

But the reality is often the opposite:

The more you funnel your needs through a single model, the less flexible you become. As Andreas Horn at IBM puts it: “There is no ‘best’ model—only the best one for your use case.”

Which language model you use isn’t just a technical decision; it’s increasingly a business decision.

Flexibility isn’t only about how tasks get done. It’s about something more pressing: if you lock into a single model, that’s exactly what happens — you’re locked in. What if you need a model that can do something different? What if external circumstances change, and your chosen model is no longer available?

In practice, many AI processes already use multiple models — each handling different steps: reframing questions, retrieving data, analyzing structures, generating outputs. As AI workflows become more complex, flexibility becomes mission-critical. You need to use the model that fits each step best. There is no universal model that can do everything for everyone.
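As an illustration of a workflow where each step uses the model that fits it best, here is a small Python sketch. Everything in it is hypothetical: `small_rewriter`, `large_generator`, and `search` are stand-in functions, not real models or TellusR components. The sketch only shows how the choice of model per step can be kept in one place.

```python
from typing import Callable

# In this sketch a "model" is any function from prompt text to output text.
Model = Callable[[str], str]


def small_rewriter(prompt: str) -> str:
    """Stand-in for a small, fast model that reframes the question."""
    return prompt.strip().rstrip("?").lower()


def large_generator(prompt: str) -> str:
    """Stand-in for a larger model that writes the final answer."""
    return f"Answer based on: {prompt}"


def search(query: str) -> str:
    """Stand-in for the retrieval step."""
    return f"top passages for '{query}'"


# One model per step; changing a step means changing one entry here.
STEP_MODELS: dict[str, Model] = {
    "reframe": small_rewriter,
    "generate": large_generator,
}


def run_pipeline(question: str, step_models: dict[str, Model]) -> str:
    """Reframe the question, retrieve passages, then generate an answer."""
    query = step_models["reframe"](question)
    passages = search(query)
    return step_models["generate"](passages)


answer = run_pipeline("  What are the delivery terms? ", STEP_MODELS)
print(answer)

# Swapping the model for a single step leaves the rest of the pipeline untouched.
cheaper = {**STEP_MODELS, "generate": lambda prompt: f"Short answer: {prompt}"}
print(run_pipeline("What are the delivery terms?", cheaper))
```

Because the pipeline only depends on the step names, a cheaper or more specialized model can replace any single step without touching the others.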

That’s why it’s valuable to have a safety switch. So you can change course, if you need to.

The three factors that shape the right LLM choice

When the pressure to adopt AI is high, it can be tempting to default to the biggest, most well-known model.

Understandable. But the model you choose doesn’t just shape the answers you get. It shapes where your data flows, which infrastructure you need to support, how dependent you become — and what it will cost you.

To make a genuinely strategic choice, you need to ask a different question: What model fits the problem we’re trying to solve — in our sector, with our data, within our requirements for security, resources, and reliability?

This question forces you to navigate three key decisions. These aren’t just about technology, but about priorities, risk, and your operational architecture.

Or, as Andreas Horn at IBM clarifies, you need to think along three axes:

- Generalist or specialist?

Large models like GPT-4, Claude Opus, and Gemini 1.5 Pro are designed for broad tasks. They are excellent generalists, but not always the best for your specific needs. Specialized models—like Mistral, IBM Granite, or industry-specific SLMs—can be simpler, cheaper, and more precise. Not “smarter” in themselves, but smarter for you.

- Heavyweight or lightweight?

Big models provide deeper reasoning but require more compute resources. They’re slower and more expensive—and oversized for many everyday tasks. Think of a heavy truck: powerful, but slow and costly to run. A smaller model is like a delivery van: fast, agile, and practical for where work actually happens. Models like LLaMA 3 or Mistral 7B can run on simpler hardware, deliver faster responses, and lower your costs. For many use cases, that’s progress—not a downgrade.

- Open or closed?

Closed models offer strong performance but limited transparency. You get answers, but do you know how they’re generated? Where does your data go? Who has access? Can it be used to train new models? What terms will apply tomorrow? Open models provide transparency, control, and the option to run solutions yourself. This offers more than technological freedom—it gives you agency in a world that changes fast.

Choosing the right LLM is a strategic decision that shapes how AI will serve your organization — today and tomorrow.

Your architecture should let you swap models

Which model you choose for a task is one thing. But it may not be your most important decision.

The critical question is whether your architecture allows you to swap models — without having to rebuild from scratch. At TellusR, we care about the AI infrastructure that will become part of the digital backbone of Norway — and the world.

We’ve developed a modern switchboard — a safety switch, if you will. The AI Communications Hub lets you maintain control over the models you use.

It’s a control center that connects language models with your internal and external data streams, allowing you to build the exact solution you need — without locking yourself into a single vendor, dataset, or cloud provider.
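What "swapping models without rebuilding" can look like in code is sketched below in Python. This is not the AI Communications Hub's actual API. The `ChatModel` interface, the `ModelHub` class, and the two stand-in models are assumptions invented for this example. The idea is simply that the application talks to one stable interface, and the model behind it is a setting.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class ChatModel(Protocol):
    """The only thing the application needs to know about a model."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for a hosted model behind a vendor API."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class UppercaseModel:
    """Stand-in for an open model running on your own infrastructure."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.upper()}"


class ModelHub:
    """Hypothetical switchboard: registers models and routes calls to the active one."""

    def __init__(self) -> None:
        self._models: dict[str, ChatModel] = {}
        self._active: Optional[str] = None

    def register(self, key: str, model: ChatModel) -> None:
        self._models[key] = model
        if self._active is None:
            self._active = key  # the first registered model becomes the default

    def use(self, key: str) -> None:
        if key not in self._models:
            raise KeyError(f"unknown model: {key}")
        self._active = key

    def complete(self, prompt: str) -> str:
        if self._active is None:
            raise RuntimeError("no model registered")
        return self._models[self._active].complete(prompt)


hub = ModelHub()
hub.register("hosted", EchoModel("hosted-model"))
hub.register("local", UppercaseModel("local-model"))

first = hub.complete("hello")   # served by the hosted stand-in
hub.use("local")                # the safety switch: one call, no rebuild
second = hub.complete("hello")  # same application code, different model
print(first)
print(second)
```

The application code calls `hub.complete` before and after the switch. Only the active model changes, which is the property that keeps you out of lock-in.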

The results?

- Flexibility in model choice — now and tomorrow

- Control over data flows — inside and outside your organization

- Security — even when circumstances change

This is how you stay in control — no matter how the AI landscape evolves.

Norwegian language needs solutions designed for Norwegian realities

If you work in municipalities, the public sector, or export industries, English-only language models and generic solutions aren’t enough. You need models that:

- Understand Norwegian language and tone

- Recognize Norwegian contexts and terminology

- Can run on Norwegian infrastructure — if and when required

We see growing ambition from institutions like the National Library of Norway and NorwAI, which are working to make open Norwegian models available.

We also collaborate with partners like Epinova, ACOS, and FDVhuset, who help public and private organizations adopt AI in ways that work — technically, legally, and operationally. In Norway. Right now.

This isn’t about isolating everything locally. It’s about building systems that work on your terms.

So, which language model should you choose?

The right model for your organization is the one that balances power with cost.

You need to weigh the breadth of large models against the precision of smaller, more efficient ones, and control against ease of use.

Most importantly, it should give you the ability to switch — whenever you need to.

When you connect your organization to language models, you’re also connecting to an ecosystem of consequences. You need to know what you’re plugging into, and you need to be able to change direction. You also need enough ownership of your architecture and infrastructure to do it on your terms. If you want to adopt AI in a safe, efficient, and future-proof way, you don’t necessarily need the biggest model.

You need a system that lets you use the one that’s right.

Curious? Let’s talk.

Message our CEO Morten Krogh-Moe and see what the right AI setup could look like for you:

morten@tellusr.com

Phone: +47 905 31877

TellusR AS

www.tellusr.com

Customer story: Find relevant information in many and complex documents

Fritzøe Engros is one of Norway’s largest building materials companies. The company uses TellusR’s AI-based search and chat technology to make product information more accessible.
